- IBM, Amazon, and Microsoft have all committed to not selling facial recognition to law enforcement, at least temporarily.

- While activists have been campaigning for the companies to do this for years, the Black Lives Matter movement appears to have tipped the scale.

- As facial recognition becomes more widely used to catch criminals, illegal immigrants, or terrorists, there is mounting concern about how the technology might be abused.

- Each company has made subtly different promises on their sales bans.

Three of the world’s biggest tech companies have backed off selling facial recognition to law enforcement amid ongoing protests against police brutality.

IBM announced on Monday it is halting the sale of “general purpose” facial recognition. Amazon on Wednesday announced it was imposing a one-year suspension on the sale of its facial recognition software to law enforcement. Microsoft followed suit on Thursday, saying it does not sell facial recognition tech to US police forces and will not do so until legislation is passed governing the use of the technology.

It’s something of a u-turn, since activists and academics have for years been urging companies not to sell facial recognition to law enforcement, on the basis that it exacerbates racial injustice.

Facial recognition is becoming an increasingly popular tool for government agencies and law enforcement to track down criminals, terrorists, or illegal immigrants. But with the Black Lives Matter protests sweeping across the world in the wake of George Floyd’s death, there are now fresh calls for the big tech companies to stop selling the technology to police.

The argument long put forward by civil rights groups and AI experts is that facial recognition disproportionately affects people of color in two ways.

Firstly, like any policing tool operated by systemically racist societies or institutions, it will inevitably be used to target people of color more often.

Secondly, the data used to build facial recognition software ingrains it with racial bias, making it more likely to misidentify women and people of color, which would in turn lead to more wrongful arrests. This is because the datasets used to train facial recognition algorithms are often predominantly made up of pictures of white men.

Here is a breakdown of exactly what each company has promised:

IBM

IBM was the first of the three companies to announce it was taking its foot off the pedal on facial recognition, and it's also made the most permanent commitment.

CEO Arvind Krishna made the announcement in a letter to members of Congress including Senators Cory Booker and Kamala Harris on June 8.

"IBM no longer offers general purpose IBM facial recognition or analysis software," Krishna wrote. "IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms."

He added that IBM hoped to start a "national dialogue" on the use of facial recognition by law enforcement.

The announcement was welcomed by many, including Alexandria Ocasio-Cortez.

Shout out to @IBM for halting dev on technology shown to harm society.

Facial recognition is a horrifying, inaccurate tool that fuels racial profiling + mass surveillance. It regularly falsely ID’s Black + Brown people as criminal.

It shouldn’t be anywhere near law enforcement. https://t.co/SOU670yRVc

— Alexandria Ocasio-Cortez (@AOC) June 10, 2020

Experts Business Insider spoke to said it was a move in the right direction, but that the language IBM had used in its announcement gave it some room to potentially keep selling facial recognition tech in future.

Eva Blum-Dumontet, a senior researcher at Privacy International who specializes in IBM, told Business Insider the specification that it would stop selling "general purpose" software gives the firm room to develop custom software for clients.

"We'll have to see what that means in practice. It's worth bearing in mind that a lot of the work IBM does is actually customized work for their customers, so it's possible we're not seeing the end of IBM doing facial recognition at all, they're just changing the label," said Blum-Dumontet.

Amazon

Even if IBM's announcement was cynically motivated or hedged, it was at least the first domino to fall.

Amazon announced on June 10 it was suspending sale of its facial recognition software Rekognition to law enforcement for one year.

"We've advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology, and in recent days, Congress appears ready to take on this challenge. We hope this one-year moratorium might give Congress enough time to implement appropriate rules, and we stand ready to help if requested," Amazon said in its announcement.

The ACLU said the one-year break doesn't go far enough. "This surveillance technology's threat to our civil rights and civil liberties will not disappear in a year," Nicole Ozer, technology and civil liberties director for the ACLU, said in a press statement.

Dr. Nakeema Stefflbauer, an AI ethics policy expert, also told Business Insider that while Amazon's statement is a step in the right direction, it doesn't address what will happen to all the police departments that already have the software. "Far better than suspending further sales would be recalling the software altogether, as is commonly done with other faulty or unreliable products," said Stefflbauer.

Andy Jassy, the head of Amazon Web Services, said in a February 2020 interview that the company does not know how many police forces have bought Rekognition.

Rekognition has come under particular scrutiny from activists in the past. In July 2018 the ACLU tested the software on members of Congress. During the test Rekognition falsely matched 28 members of Congress, disproportionately people of color, with mugshots of people who had been arrested. MIT researcher Joy Buolamwini also published a paper in January 2019 which found Rekognition was consistently worse at identifying faces belonging to women and people of color.

Amazon's response was that the activists and researchers were not paying enough attention to the software's "confidence threshold," the minimum confidence score, expressed as a percentage, that the software must reach before it reports a match. As it started to sell the tool to police forces, however, reports emerged that law enforcement officers weren't paying attention to the tool's confidence threshold either, and were even running composite sketches and photos of celebrities through the system in their hunt for suspects.

Microsoft

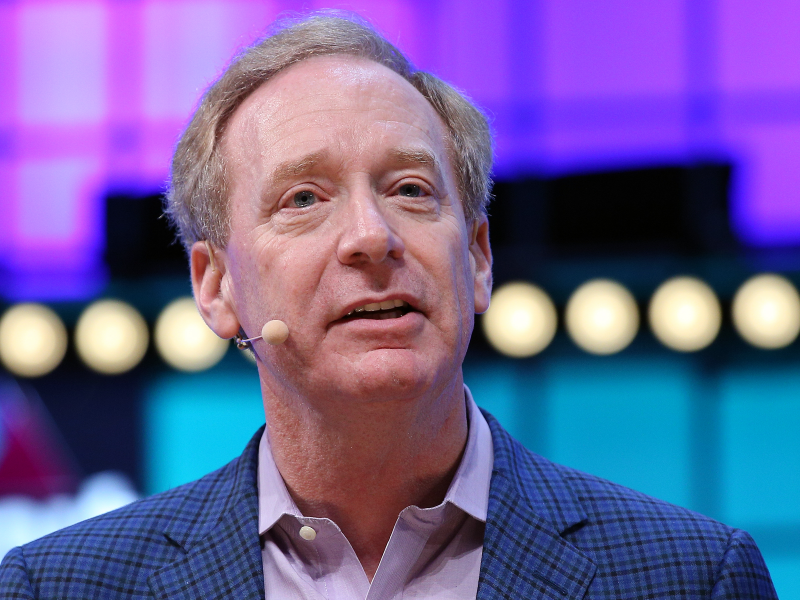

Microsoft's president Brad Smith said in an interview with the Washington Post on Thursday, June 11, that Microsoft does not sell facial recognition tech to US police forces, and committed to not sell it until a national law governing its use is brought in.

"The number one point that I would really underscore is this: we need to really use this moment to pursue a strong national law to govern facial recognition that is grounded in the protection of human rights," said Smith.

Microsoft president @BradSmi says the company does not sell facial recognition software to police depts. in the U.S. today and will not sell the tools to police until there is a national law in place “grounded in human rights.” #postlive pic.twitter.com/lwxBLjrtZL

— Washington Post Live (@PostLive) June 11, 2020

This came two days after more than 250 Microsoft employees published an open letter calling on the firm to sever business relationships with police forces more generally.

Without a legal framework, other companies could rush in to fill the gap.

Smith cautioned that if giants like Microsoft, Amazon, and IBM step back entirely from facial recognition, smaller companies could rush in to fill the vacuum.

"If all of the responsible companies in the country cede this market to those that are not prepared to take a stand, we won't necessarily serve the national interest or the lives of the Black and African-American people of this nation well. We need Congress to act, not just tech companies alone," he said.

Dr. Nakeema Stefflbauer voiced similar concerns that less well-known companies could start touting facial recognition to police.

"From a US perspective, these announcements confirm the serious harm that unregulated facial recognition technology in the hands of law enforcement has already caused Black and other [minority] groups to suffer," said Stefflbauer.

Dr. Stefflbauer added that smaller vendors such as Clearview, Kairos, and PredPol could continue selling facial recognition tech without new laws, both in the US and the EU.

She added: "In my opinion, this is the moment when US and EU governments must take technology regulation seriously and pass comprehensive legislation: failure to do so is nothing less than giving permission for an unchecked assault on human rights."

In an email to Business Insider, Joy Buolamwini, who looked at bias in Amazon's facial recognition tech, also stressed the need for a regulatory framework.

"We cannot rely on self-regulation or hope companies will choose to rein in harmful deployments of the technologies they develop," she said.

"The first step is to press pause, not just company-wide, but nationwide."

This still marks a huge shift in the tech companies' attitudes.

"Scholars and activists like Joy Buolamwini, Dr. Timnit Gebru, and Deb Raji are not alone in their push for fairness and accountability in technology that is deployed at scale. But the turnaround from big tech companies is really stunning, in light of the incredible attacks and attempts to discredit these women that their research was initially met with," said Stefflbauer.

"It should not be the responsibility of members of a discriminated group to prove that a commercial technology used on millions of people is unfairly targeting and victimizing them, but that has so far been the case."

In her email to Business Insider, Joy Buolamwini said the tech giants should throw more of their weight behind promoting racial equality.

"I also call on all companies that substantially profit from AI - including IBM, Amazon, Microsoft, Facebook, Google, and Apple - to commit at least $1 million each towards advancing racial justice in the tech industry."

"The money should go directly as unrestricted gifts to support organizations like the Algorithmic Justice League, Black in AI, and Data for Black Lives that have been leading this work for years. Racial justice requires algorithmic justice."

Not everyone is convinced the tech firms are acting in good faith.

Activist groups and scholars have largely welcomed the companies' decisions to hit pause on selling their tech to police, although not all are convinced the move is ethically motivated.

"It appears the companies are using this moment to capture goodwill so that they get to write the federal regulations on facial recognition," Jacinta Gonzalez, a senior campaign director at civil rights group Mijente, told Business Insider.

"We cannot allow their lobbyists to write these rules in such a way where the companies will be allowed to profit from Black and brown communities. Facial recognition is a very dangerous technology that further oppresses a people who've long been on the receiving end of racist policing in the United States."