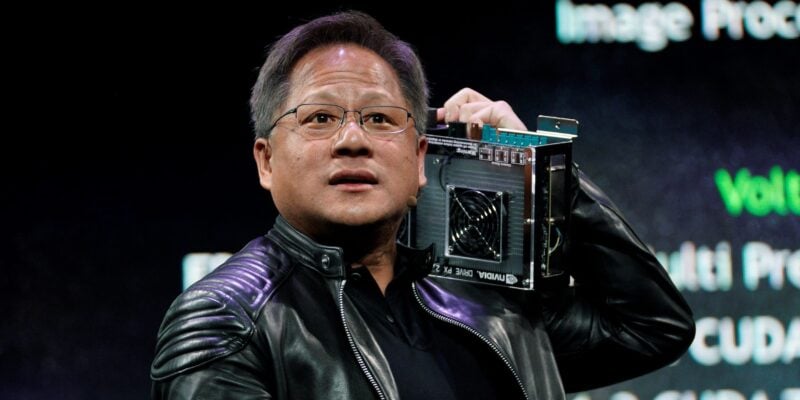

Philippe Lopez/AFP via Getty Images

- A Chinese AI emotion-recognition system can monitor people's facial features to track how they feel.

- Don't even think about faking a smile. The system is able to analyze if the emotion displayed is genuine.

- The company behind the system counts Huawei, China Mobile, and PetroChina among its clients, though it is unclear if these companies have purchased the emotion-recognition system for use in their offices.

You've worked twelve hours and are feeling tired and frustrated, but you force yourself to keep a neutral expression on your face. You're too worn out to keep typing, but you can't yawn, grimace, or frown because an all-seeing eye is watching you. The eye charts your emotions, and – make no mistake – it will tell on you if you look a little too angry.

This is not the plot of a science fiction movie or the next "Black Mirror" episode. But it might already be the reality for some Chinese employees working for major tech corporations.

An AI emotion-recognition system developed by Chinese company Taigusys can detect and monitor the facial expressions of multiple people and create detailed reports on each individual to track how they're feeling. Researchers say, however, that systems like these are not only often inaccurate, but that at baseline, they're also deeply unethical.

The system was first reported on this May in an investigative piece by The Guardian. Taigusys lists multinational corporations like Huawei, China Mobile, China Unicom, and PetroChina among its major clients, though it is unclear whether these companies are licensing this particular product for use.

Insider contacted Taigusys and the 36 companies on its client list, none of which responded to requests for comment.

How does it work?

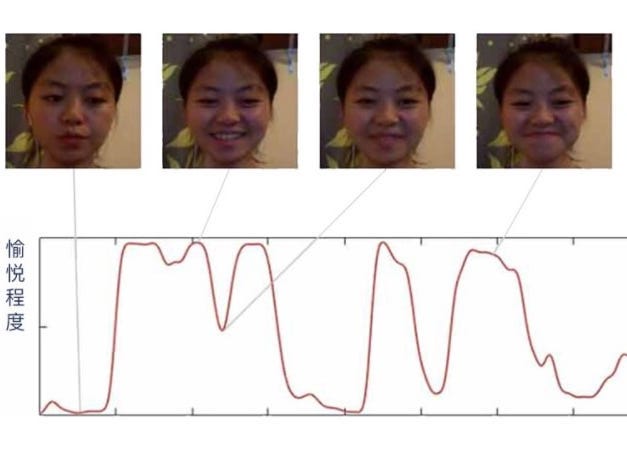

Taigusys

Taigusys claims on its website that its system helps to "address new challenges" and can "minimize the conflicts" posed by emotional, confrontational behavior.

This is done through an AI system, which can run the expressions of multiple individuals through an assessment at once. An algorithm then assesses each individual's facial muscle movements and biometric signals and evaluates them on several scales outlined by Taigusys.

"Good" emotions the program looks for include happiness, surprise, and feeling moved by something positive. The AI system also checks the person's face for negative emotions, like disgust, sorrow, confusion, scorn, and anger. Neutral emotions - like how "focused" one is on a task - are considered too.

Taigusys claims that its software is so nuanced it can detect when someone is faking a smile.

Taigusys says the emotion-recognition software can also generate reports on individuals and recommend them for "emotional support" if they exceed the recommended markers for "negative emotions."

"Based on the analysis of one's facial features, the system can calculate how confrontational, stressed, or nervous an individual is, among other metrics. We can also analyze the person's emotional response and figure out if they are up to anything suspicious," said the company in its product description of the system.

Activists urge caution with deploying such programs, highlighting ethics and human rights issues

Human rights activists and tech experts Insider spoke to sounded the alarm about deploying such programs, noting that emotion-recognition systems are fundamentally rooted in unethical, unscientific ideas.

Vidushi Marda, senior program officer at British human rights organization Article 19, and Shazeda Ahmed, Ph.D. candidate at the UC Berkeley School of Information, told Insider that their joint research paper on China's emotion-recognition market, published this January, uncovered a staggering 27 companies in China that were working on developing emotion-recognition programs, including Taigusys.

"If such technology is rolled out, it infringes on the ethical and legal rights of employees in the workplace. Even in the premises of a privately-owned workplace, there's still an expectation of privacy and dignity, and the right of employees to act freely and think freely," Marda added.

She noted that the emotion-recognition systems are built on pseudoscience and based on the tenuous and scientifically unproven assumption that facial expressions are linked to one's inner emotional state.

"There is no way to 'optimize' these technologies to be used ethically because the assumptions that they are built upon are themselves unethical," said Marda.

What's more, posits Ahmed, the intensive panoptic surveillance imposed by such technology could lead people to self-censor and police their own behavior to game the system.

Daniel Leufer, Europe policy analyst at digital civil rights non-profit Access Now, told Insider that even if they could be made to work despite their shaky scientific basis, emotion-recognition programs constitute a "gross violation" of several human rights, including the rights to privacy, free expression, and freedom of thought.

"Worst of all, all of these violations potentially occur even if emotion recognition is not scientifically possible. The very fact that people believe it is, and create and deploy systems claiming to do it, has real effects on people," Leufer said.

"A society that monitors our emotions is a society that will reward some and penalize others. Are certain emotions predictive of crime or low productivity?" noted Rob Reich, professor of political science at Stanford and co-author of a forthcoming book on ethics and technology, "System Error: Where Silicon Valley Went Wrong and How We Can Reboot."

"Big Brother will be watching and equipped to enforce an emotional regime. The result is a systematic violation of privacy and degradation of humanity," Reich said.

"It is never ethical for other people to use AI systems to surveil people's emotions, especially not if those doing the surveillance are in a position of power in relation to those surveilled, like employers, the police, and the government," Leufer added.

This may be why, thus far, prisons are among the few workplaces that have openly admitted to using the program.

The Guardian spoke to Chen Wei, a general manager at Taigusys, who told the newspaper that the company's systems are installed in 300 prisons and detention centers around China. The system is connected to around 60,000 cameras in such facilities and has helped keep prisoners "more docile," said Chen.

With the system, the authorities can monitor people in real-time, 24 hours a day.

"Violence and suicide are very common in detention centers. Even if police nowadays don't beat prisoners, they often try to wear them down by not allowing them to fall asleep. As a result, some prisoners will have a mental breakdown and seek to kill themselves. And our system will help prevent that from happening," Chen told the Guardian.

Beyond the ethical implications of emotion recognition software, many doubt the technology is refined enough to paint an accurate picture

Desmond Ong, assistant professor at the department of information systems and analytics at the National University of Singapore's School of Computing, believes that the software could potentially help with "identifying dangerous life-and-death situations like fatigued, inebriated, or mentally unwell pilots and train drivers."

But, he added, "it could also be used to unfairly penalize or inhumanely optimize employee performance, like making 'empathy' a key performance indicator for call center employees."

Ong noted that systems like the one developed by Taigusys also undermine the complexity of human emotional expression by reducing people to the expression of basic emotions.

"Simply don't use it, don't develop it, and don't fund it. It's scientifically shaky, it violates a range of fundamental rights, and it's also just downright creepy," he said.

Besides, it's unclear how well AI would be able to understand how complex emotions really are.

Sandra Wachter, associate professor and senior research fellow at the University of Oxford's Oxford Internet Institute, told Insider it would be unlikely for an algorithm to accurately understand humans' highly complex emotional states via facial expressions alone.

She noted, for example, how women are often socialized to smile politely, which may not be a sign of happiness or agreement.

"Applying emotion-recognition software to employees also poses a threat to diversity because it forces people to act in a way that adheres to an algorithmic and artificial 'mainstream' standard, and so infringes on peoples' autonomy to express themselves freely," Wachter said.

"We can see a clash with fundamental human rights, such as free expression and the right to privacy," she added.